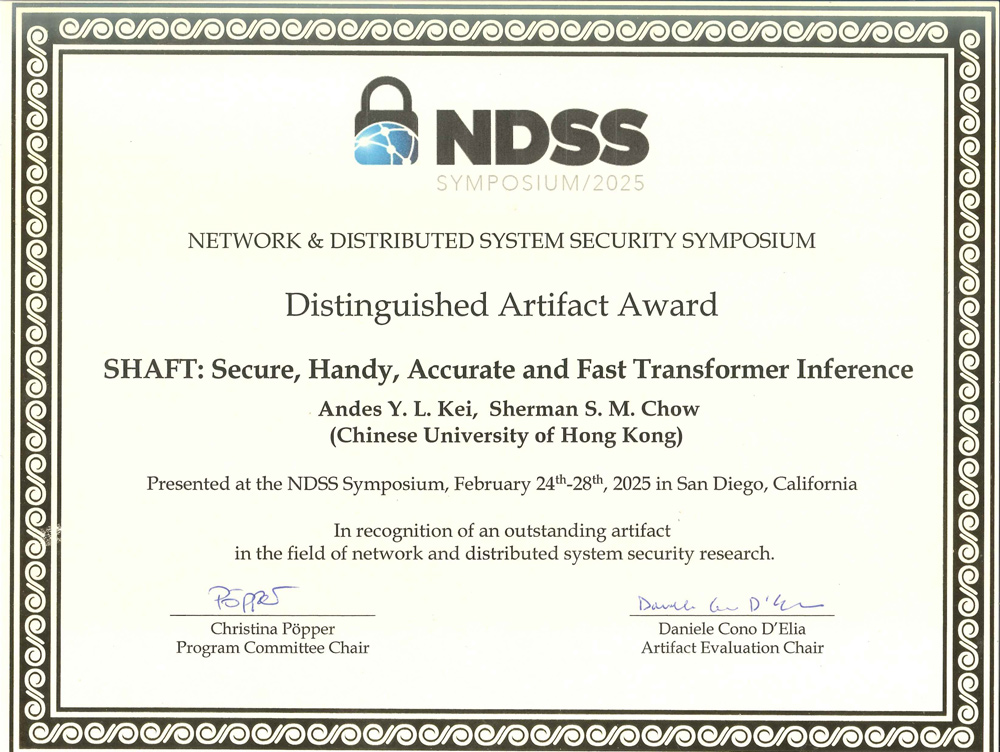

We are pleased to share that the paper “SHAFT: Secure, Handy, Accurate, and Fast Transformer Inference” by Andes Kei (IE PhD student and Hong Kong PhD Fellowship Scheme recipient) and his advisor, Prof. Sherman Chow, has received the Distinguished Artifact Award at the Network and Distributed System Security (NDSS) Symposium 2025. NDSS is a leading security conference. Its artifact evaluation process, established in 2024, granted three awards among 68 submissions this year.

SHAFT is an efficient private inference framework for transformers, the foundational architecture of many state-of-the-art large language models. The team proposed cryptographic protocols that perform key transformer computations on encrypted data. SHAFT supports secure inference for large language models by seamlessly importing pretrained models from the popular Hugging Face Transformers library via ONNX, an open standard for representing neural networks. This functionality of the artifact enables the machine learning community to deploy private inference on a wide range of models, even without specialized cryptographic expertise.
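
For readers unfamiliar with the idea of computing on hidden data, the toy Python sketch below may help. It is not SHAFT's protocol or API. It assumes two-party additive secret sharing, one common building block for private inference, and treats the weights as public for simplicity. All names (`share`, `reconstruct`, `linear_on_shares`) and the ring size are hypothetical choices made for illustration.

```python
import secrets

MOD = 2**64  # ring size for the shares (an illustrative assumption)

def share(x):
    """Split integer x into two random-looking shares that sum to x mod MOD."""
    s0 = secrets.randbelow(MOD)
    s1 = (x - s0) % MOD
    return s0, s1

def reconstruct(s0, s1):
    """Recombine two shares and map the result back to a signed integer."""
    v = (s0 + s1) % MOD
    return v - MOD if v >= MOD // 2 else v

def linear_on_shares(x_shares, weights, bias_share):
    """One party's local work: a weighted sum over its own shares only."""
    return (sum(w * s for w, s in zip(weights, x_shares)) + bias_share) % MOD

# Private input (hypothetical values) and public weights of one linear unit.
x = [3, -1, 4]
w = [2, 5, -7]
b = 10

shares = [share(v) for v in x]
party0 = [s[0] for s in shares]  # what the first party sees
party1 = [s[1] for s in shares]  # what the second party sees
b0, b1 = share(b)

# Each party computes on its shares without learning the input x.
y0 = linear_on_shares(party0, w, b0)
y1 = linear_on_shares(party1, w, b1)

# Only combining both output shares reveals the result.
result = reconstruct(y0, y1)
print(result)  # -17, i.e. 2*3 + 5*(-1) + (-7)*4 + 10
```

Linear steps like this one need no interaction between the parties. The nonlinear parts of a transformer are harder to evaluate on hidden data and are not covered by this sketch.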